Science

AI-Generated Music Evokes Stronger Emotions than Human Compositions

Generative artificial intelligence (Gen AI) is making waves in the creative sector, particularly in music. A recent study published in PLOS One examined whether AI-generated music can elicit emotional responses comparable to those produced by music from human composers. The research was conducted by the Neuro-Com Research Group at the Autonomous University of Barcelona (UAB) in collaboration with the RTVE Institute in Barcelona and the University of Ljubljana in Slovenia.

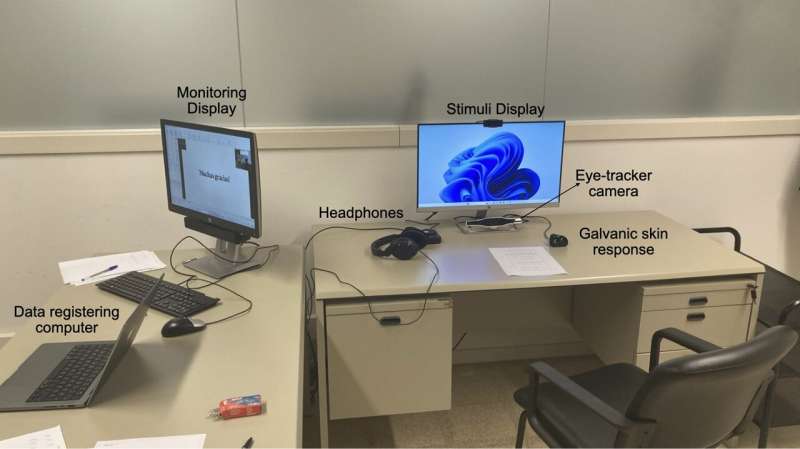

The study involved 88 participants who viewed audiovisual clips featuring identical visuals but accompanied by three distinct sound conditions: music composed by humans, AI-generated music crafted from a complex prompt, and AI-generated music created from a simpler prompt. The researchers measured participants’ physiological responses, including pupil dilation, blinking, and galvanic skin response, alongside their self-reported emotional reactions.

The findings showed that AI-generated music triggered significantly greater pupil dilation, a physiological marker of heightened emotional arousal. In addition, music generated from the more complex prompts led to more blinking and greater changes in galvanic skin response, suggesting a higher cognitive load. Interestingly, while participants rated AI-generated music as more exciting, they found human-composed music more familiar.

Nikolaj Fišer, the lead author of the study, noted that “both types of AI-generated music led to greater pupil dilation and were perceived as more emotionally stimulating compared to human-created music.” This physiological response is closely associated with elevated emotional arousal levels. Fišer added that “our findings suggest that decoding the emotional information in AI-generated music may require greater cognitive effort,” highlighting a potential divergence in how human brains process AI-generated versus human-composed music.

These insights have significant implications for the future of audiovisual production. Tools that tailor music to fit a visual narrative could make creative work more resource-efficient. Moreover, the ability to fine-tune emotional impact with automated tools offers creators new ways to evoke specific responses from their audiences.

This research not only expands our understanding of emotional responses to sound stimuli but also raises new challenges for designing sensory experiences in audiovisual media. As AI takes on a growing role in creative fields, understanding how the technology shapes emotional engagement becomes crucial.

For more detailed insights, refer to the study by Nikolaj Fišer et al., titled “Emotional impact of AI-generated vs. human-composed music in audiovisual media: A biometric and self-report study,” published in PLOS One on July 24, 2025. The study can be accessed through DOI: 10.1371/journal.pone.0326498.
